Writing with integrity in the AI era

A free reflection worksheet for writers who love readers

AI is so thorny, isn’t it?

We’re all navigating this new territory, and it’s become…difficult. AI tools promise speed and less work, and it’s tempting to turn to them when we’re just trying to stay on top of the week. And it’s so cool what these tools can do, even when they do it badly. Isn’t this what the future was supposed to be?

But the speed of adoption leaves us trying to catch our breath. Last year, I could pick up on AI-generated language after reading only a sentence, and I laughed myself silly at the result when I asked an AI tool to produce an illustration of me (check it out below!). But I’ve seen these tools’ capabilities improve month over month, especially as users become better at working with them. Have you seen this?

I meet people every day in my business circles who already rely heavily on AI tools. I’ve been experimenting with ChatGPT, too. I want to see how far I can push it in different tasks: in analysis and summary, as a brainstorming or revision partner, as devil’s advocate or cheerleader. It’s incredible to watch how quickly a tool like ChatGPT can analyze and reorganize information. (Go ask it to sort, categorize, and prioritize information into a table and it’s done in seconds. I find myself exclaiming aloud every time.) Earlier this year, I even built my own AI tool to see if it could mimic my writing voice. After a few months, it’s getting better, but we still argue over emojis and overly dramatic language. (I argue with it a lot!)

No turning back

In my work as a book coach and editor, I know this to be true: Writers are using AI regularly. Tools like ChatGPT, Claude, and Perplexity have become writing and revision partners, copy editors, illustrators, and more. It worries me, because I have seen well-meaning people put forward poor AI-generated output…but I have also seen clever use of it. One writer used AI to push her further in her writing than she was inclined to go herself – to deepen her work. Each week, she took my feedback, did her work, analyzed it with AI, and revised it some more. She figured out how to use it to her advantage to produce original work, and it was fast and powerful as a revision partner. With the right prompts and setup, it’s like a young intern in the office: really eager to please, completely certain in its knowledge, not always accurate but confident. Still doesn’t make the coffee, though.

As excited as many of us are by these tools, a lot of us are still grappling with our feelings around AI. It feels like cheating to take credit for work done by a bot; it feels ethically wrong to use tools that were built on the work of people who weren’t compensated; by relying on AI tools, it feels like we’re devaluing the skills of millions of people whose careers will be eliminated in a matter of…months? And the impact on climate change is worrying.

I feel like I’m being pushed up against our values every time I use the technology. Some days, I feel it so strongly that I want to call my discomfort moral injury, because I constantly have to choose between poor options, and that’s exhausting.

In my community of writers and readers, we know the heart of our writing isn’t about algorithms or automation. There’s a human being on the other side of the page. The books we love are deeply connected to our hearts and minds. Last week, I was in the kitchen, my hands full of dough, listening to the audiobook version of Fredrik Backman’s My Friends when unexpected tears pooled in my eyes and I almost bent over with heartache (the good sort) when Backman dropped a truth bomb into the narration. No bot could do that. Only a human.

It’s about human connection. How do we protect that?

What matters?

So I’ve been listening and thinking about how to move forward with AI, both in my business and in my own writing. Everyone has an opinion about it right now, and conversations are happening daily in my circles. Here is where I am right now: This conversation about AI and writing doesn’t have to be about fear. You don’t need to pick a side or throw out your intuition. You just need to know:

What matters to you

Who you're writing for

What kind of decisions will keep you in integrity with both

Yeah, that’s a lot. But I’m weary of the constant vigilance, and I think we all are. So I wanted to create something for all of us who want to be thoughtful and ethical navigating this exact moment. And because my intern was sitting there not making coffee, I asked it to help.

It’s called “AI & Reader-Centered Writing: A Reflection Sheet for Writers Who Love Readers.”

I wanted it to be a place where writers can document their excitement and their worries.

I wanted it to be judgement-free.

I wanted it to be practical.

I wanted it to be a starting point and a living document.

And I wanted it to focus on reader-centered writing.

If you’ve felt unsure about how to ethically and intentionally use AI or writing tools in your work, go ahead: Download it and use it. Click on the button below and you’ll be prompted to make a copy of the document in Google Drive. You can fill it out in Google Docs or download a copy to use in your preferred program.

We can be clear, compassionate, and courageous writers – with or without AI tools. Use the reflection sheet to decide where to set your own AI boundaries—based on your reader, your voice, and your values. Let me know how it goes.

PS. I’m back in a few days with a new Readability Book Review. And coming soon: Canadian Book Coaches share our top book picks in time for Canada Day.

Summer’s here!

One more thing! I have a summer writing tip series running on Wednesdays on my socials. You can find my “Lawn Chair Coaching” tips on LinkedIn, Bluesky, Facebook and Instagram. Below is a sneak peek at tomorrow’s tip.

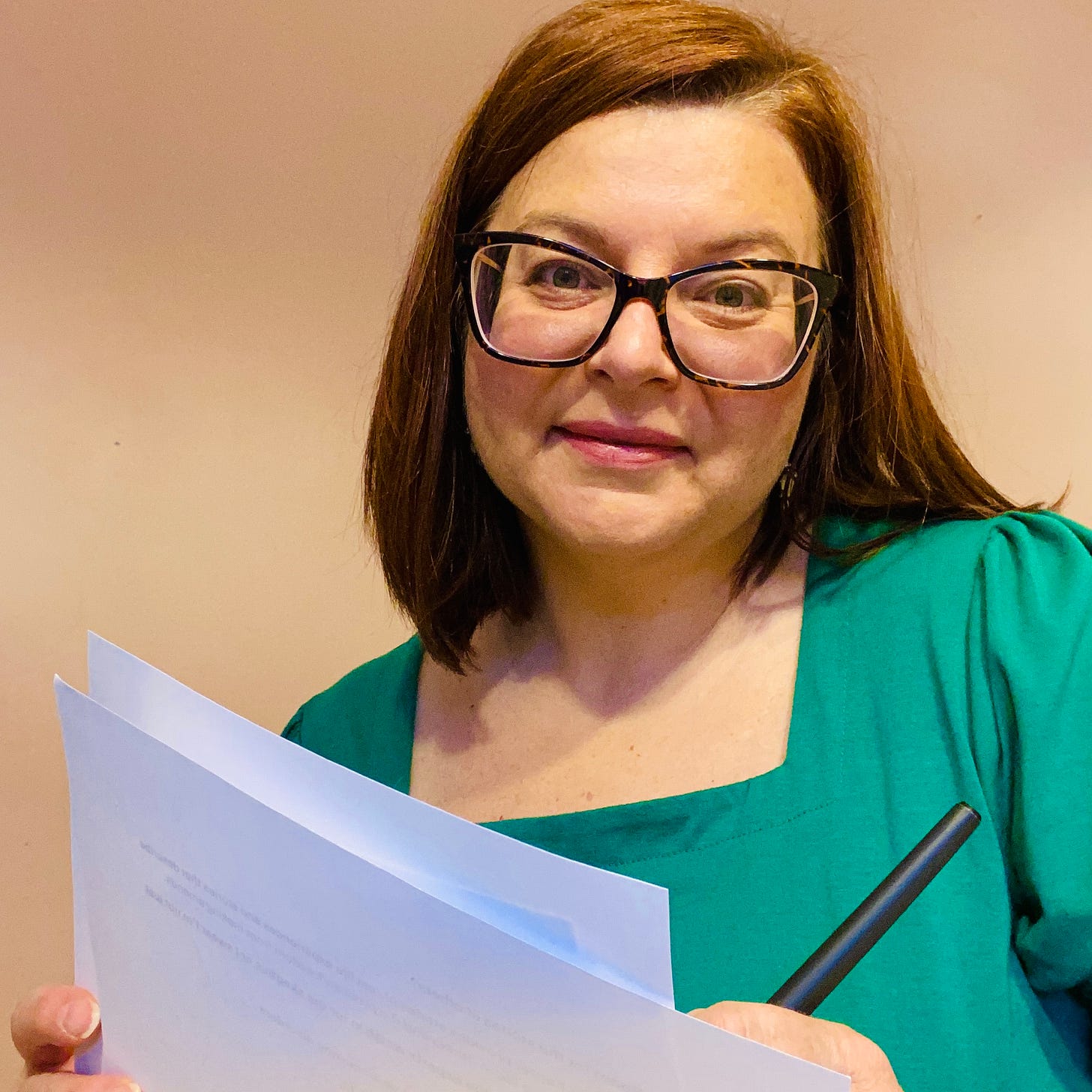

About Dinah • I’m a book coach and I work with writers who want to inspire change by sharing their experience and know-how. I help them write big-hearted books by supporting accountability, mindset, and craft. If that sounds like you, get in touch to ask about book coaching and whether it could help you.